ARTICLES

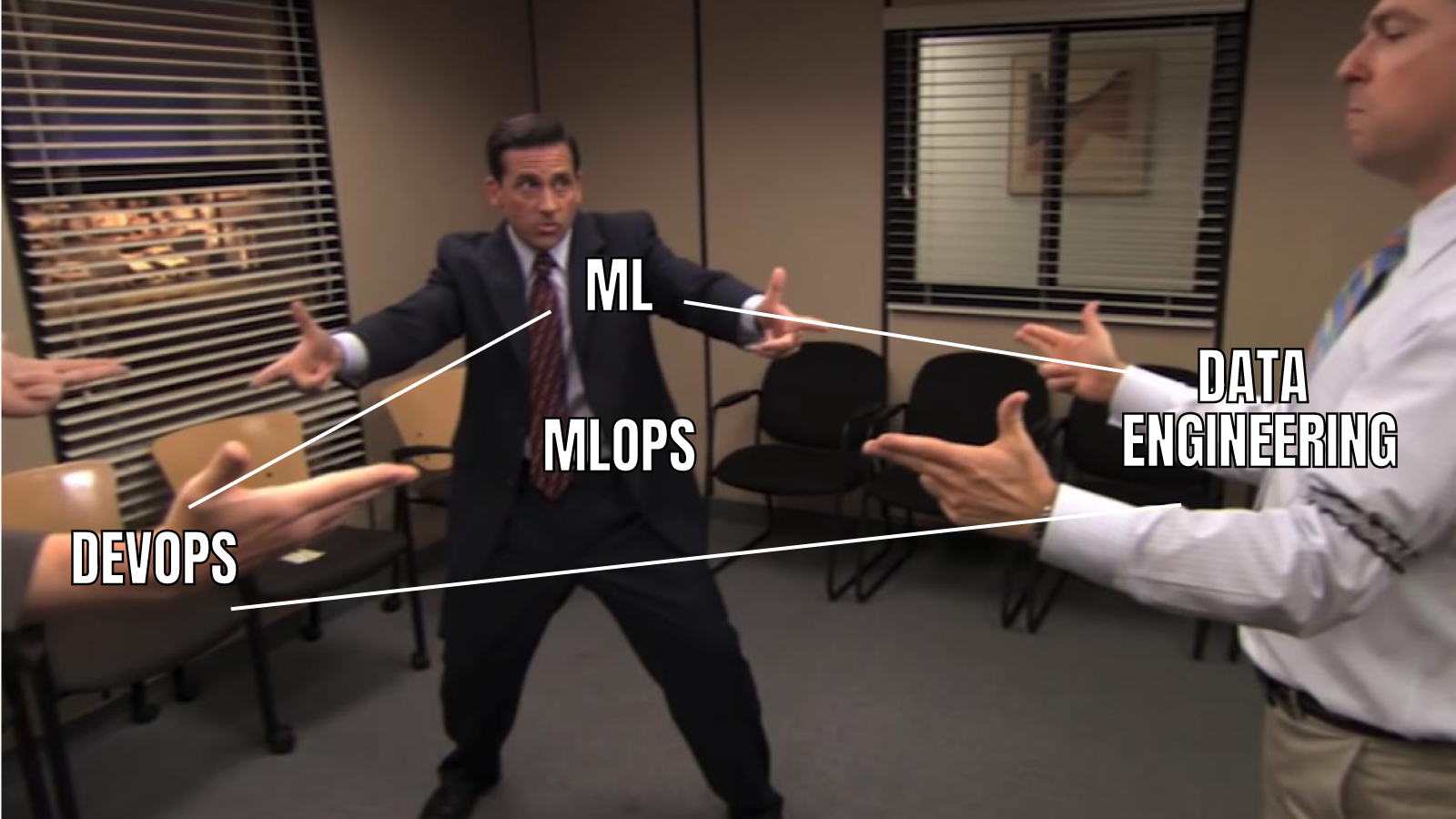

What is MLOps and do you need it? | 5 min | MLOps | Adrian Bednarz | techwithadrian on Medium

In many cases you can survive without MLOps. In Adrian’s opinion, though, any successful ML-focused company must employ the principles and approaches advocated by MLOps. In this article, he shows when you need MLOps and when you don’t.

TL;DR: immediately, no; at some point, yes.

Recommend API: Unified end-to-end machine learning infrastructure to generate recommendations | 12 min | ML | Katrina Ni & Aaron Maurer | Slack Engineering Blog

Slack has developed a unified framework called the Recommend API, which lets its users bootstrap new recommendation use cases behind an API that is easily accessible to engineers at Slack.

Behind the scenes, these recommenders reuse a common set of infrastructure for every part of the recommendation engine, such as data processing, model training, candidate generation and monitoring. This has allowed the team to deliver a number of different recommendation models across the product, improving the customer experience in a variety of contexts.
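To make the "one shared pipeline, many use cases" idea concrete, here is a minimal Python sketch. It is not Slack’s Recommend API: the `UseCase` and `RecommendService` names, the lambda-based candidate source and the hard-coded scores are all hypothetical, and a real system would back each step with data processing, trained models and monitoring rather than in-memory functions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical types: a candidate generator returns item IDs for a user,
# and a scorer assigns a relevance score to a (user, item) pair.
CandidateFn = Callable[[str], List[str]]
ScoreFn = Callable[[str, str], float]


@dataclass
class UseCase:
    """One recommendation use case plugged into the shared pipeline."""
    candidates: CandidateFn
    score: ScoreFn


class RecommendService:
    """Shared serving path reused by every registered use case (illustrative only)."""

    def __init__(self) -> None:
        self._use_cases: Dict[str, UseCase] = {}

    def register(self, name: str, use_case: UseCase) -> None:
        self._use_cases[name] = use_case

    def recommend(self, name: str, user_id: str, k: int = 3) -> List[Tuple[str, float]]:
        use_case = self._use_cases[name]
        # Common steps for every use case: generate candidates, score them, keep the top k.
        scored = [(item, use_case.score(user_id, item)) for item in use_case.candidates(user_id)]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


# Usage: register a hypothetical "channels" use case and query it.
service = RecommendService()
service.register(
    "channels",
    UseCase(
        candidates=lambda user: ["#general", "#ml", "#random"],
        score=lambda user, item: {"#general": 0.2, "#ml": 0.9, "#random": 0.5}[item],
    ),
)
print(service.recommend("channels", "U123", k=2))  # [('#ml', 0.9), ('#random', 0.5)]
```

The design choice being illustrated is that only the candidate source and scorer differ per use case; everything else is shared.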

dbt modeling at scale | 11 min | Data Platform | William Nouet | Checkout Blog

In this article, William walks through data processing in Snowflake with dbt, from development to deployment and monitoring, using his team’s Data Platform as the example. The platform is structured into four main pillars: infrastructure, ingestion, processing and governance.

5 MLOps predictions for 2023 | 4 min | MLOps | Meta Heuristic

- 4x more spending on ML

- Seven-figure monthly GCP/AWS billing is the new normal

- 66% of projects never make it to production

- MLOps is a technology problem, not a people problem

- Integration will still be difficult

ML Models Monitoring — Fundamentals & Practice | 14 min | ML | Sunil Kumar | Medium

An ML model loses predictive performance when either (1) the probability distribution of any *relevant* input feature, of the output, or of both changes (the system variables change, which violates the IID assumption), or (2) the relationship between the relevant input features and the output changes (the underlying system itself changes).
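The first failure mode, a shift in an input feature’s distribution, can be checked without labels. As one illustration, not necessarily the approach the article uses, the sketch below computes the two-sample Kolmogorov-Smirnov statistic between a reference (training) sample and a current (serving) sample of one feature. The `feature_drifted` helper and its 0.2 threshold are hypothetical; the second failure mode, a change in the input-output relationship, generally needs ground-truth labels to detect.

```python
from bisect import bisect_right
from typing import Sequence


def ks_statistic(reference: Sequence[float], current: Sequence[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between the
    empirical CDFs of the reference and current samples."""
    ref = sorted(reference)
    cur = sorted(current)
    d = 0.0
    # Both empirical CDFs are step functions that only change at observed
    # values, so checking every observed value finds the maximum gap.
    for x in ref + cur:
        f_ref = bisect_right(ref, x) / len(ref)
        f_cur = bisect_right(cur, x) / len(cur)
        d = max(d, abs(f_ref - f_cur))
    return d


def feature_drifted(reference: Sequence[float], current: Sequence[float],
                    threshold: float = 0.2) -> bool:
    # Hypothetical fixed threshold; a significance test could be used instead.
    return ks_statistic(reference, current) > threshold


training_ages = [1, 2, 3, 4, 5]
print(ks_statistic(training_ages, [1, 2, 3, 4, 5]))     # 0.0, no shift
print(ks_statistic(training_ages, [6, 7, 8, 9, 10]))    # 1.0, fully shifted
```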

Machine Learning in Production using Apache Airflow | 6 min | ML & Apache Airflow | Danylo Baibak | Towards Data Science Blog

Takeaways:

- The data validation step is very important. Correcting an already trained ML model can be expensive, so stop training a new model if the data is invalid.

- Airflow is a powerful tool that allows us to check data with minimum implementation effort.

- It can be difficult and expensive to assemble a representative test dataset. Monitoring the error on the training dataset is still a good alternative.

- Storing a model’s metadata can help us spot critical changes in the nature of the model.
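A minimal sketch of the first takeaway, assuming batches arrive as lists of dicts: `validate_batch` returns `False` when a batch breaks simple checks, so training can be skipped. The column names, value ranges and null tolerance are hypothetical. In Airflow, a callable like this could be passed as `python_callable` to a `ShortCircuitOperator`, which skips downstream tasks (such as training) when the callable returns a falsy value; the article may wire its checks differently.

```python
from typing import Dict, List, Optional

# Hypothetical schema: expected columns and their allowed (min, max) ranges.
EXPECTED_RANGES = {"age": (0, 120), "income": (0, 1_000_000)}
MAX_NULL_FRACTION = 0.05  # hypothetical tolerance for missing values


def validate_batch(rows: List[Dict[str, Optional[float]]]) -> bool:
    """Return True only if the batch looks safe to train on."""
    if not rows:
        return False  # an empty batch is treated as invalid
    for column, (low, high) in EXPECTED_RANGES.items():
        values = [row.get(column) for row in rows]
        # Too many missing values in a column invalidates the batch.
        nulls = sum(v is None for v in values)
        if nulls / len(rows) > MAX_NULL_FRACTION:
            return False
        # Any present value outside the allowed range invalidates the batch.
        if any(v is not None and not (low <= v <= high) for v in values):
            return False
    return True


print(validate_batch([{"age": 34, "income": 52_000.0}]))   # True
print(validate_batch([{"age": 250, "income": 52_000.0}]))  # False
```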

Netflix’s New Algorithm Offers Optimal Recommendation Lists for Users with Finite Time Budget | 4 min | ML | Claudio Masolo | InfoQ Blog

Netflix developed a new machine learning algorithm based on reinforcement learning to create an optimal list of recommendations that accounts for the user’s finite time budget. Recommendation use cases often ignore the fact that users have only limited time to make a decision; Netflix added this dimension, alongside the relevance of the recommendations, to its recommendation system and to decision problems more generally.
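Netflix’s method is based on reinforcement learning; the sketch below is not that algorithm. It swaps in a simple knapsack-style greedy heuristic purely to show why a time budget changes the list: each item has a hypothetical relevance score and a hypothetical time cost for the user to evaluate it, and items are ranked by relevance per unit of time and kept while the budget lasts.

```python
from typing import List, Tuple

# Each item: (item_id, relevance, time_cost). All fields are hypothetical.
Item = Tuple[str, float, float]


def budgeted_list(items: List[Item], time_budget: float) -> List[str]:
    """Greedy heuristic: order by relevance per unit of evaluation time and
    keep items while the user's remaining time budget covers them."""
    ranked = sorted(items, key=lambda it: it[1] / it[2], reverse=True)
    chosen: List[str] = []
    remaining = time_budget
    for item_id, _relevance, cost in ranked:
        if cost <= remaining:
            chosen.append(item_id)
            remaining -= cost
    return chosen


catalog = [("a", 0.9, 3.0), ("b", 0.6, 1.0), ("c", 0.5, 1.0), ("d", 0.8, 4.0)]
print(budgeted_list(catalog, time_budget=5.0))  # ['b', 'c', 'a']
```

A relevance-only ranking would put "d" second even though it can never fit alongside "a" in a 5-unit budget; weighing cost against relevance avoids spending the user’s time on it.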

TOOLS & TUTORIALS

Serving Machine Learning models with Google Vertex AI | 10 min | ML | Sascha Heyer | Google Cloud - Community Blog

Companies frequently deploy their models to virtual machines (Google Compute Engine or even on-prem machines), which Sascha argues should be avoided. Google Cloud provides a dedicated service for deploying models: Vertex AI Endpoints.

Vertex AI Endpoints offers great flexibility paired with ease of use. You can keep it simple, or go all in and customize it to your needs using custom containers.

This article covers how to put your models into production and serve requests at a large scale, as well as a few workarounds for the service’s limitations.
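As a rough sketch of the custom-container route, the server below uses only the Python standard library. It assumes the documented Vertex AI custom-container contract: the port and the health and predict routes are read from the `AIP_HTTP_PORT`, `AIP_HEALTH_ROUTE` and `AIP_PREDICT_ROUTE` environment variables, requests carry a JSON body with an `instances` list, and responses return a `predictions` list. The fallback values and the summing "model" are hypothetical placeholders for local testing.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import List

# Vertex AI sets these for custom containers; the fallbacks are hypothetical
# local-testing defaults.
PORT = int(os.environ.get("AIP_HTTP_PORT", "8080"))
HEALTH_ROUTE = os.environ.get("AIP_HEALTH_ROUTE", "/health")
PREDICT_ROUTE = os.environ.get("AIP_PREDICT_ROUTE", "/predict")


def predict(instances: List[List[float]]) -> List[float]:
    # Hypothetical placeholder model: sums each instance's features.
    return [sum(features) for features in instances]


def handle_predict(body: bytes) -> bytes:
    """Map a {"instances": [...]} request body to a {"predictions": [...]} response."""
    payload = json.loads(body)
    return json.dumps({"predictions": predict(payload["instances"])}).encode()


class Handler(BaseHTTPRequestHandler):
    def _send(self, status: int, body: bytes) -> None:
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_GET(self) -> None:
        # Health checks: 200 tells Vertex AI the container is ready.
        self._send(200 if self.path == HEALTH_ROUTE else 404, b"{}")

    def do_POST(self) -> None:
        if self.path != PREDICT_ROUTE:
            self._send(404, b"{}")
            return
        length = int(self.headers.get("Content-Length", 0))
        self._send(200, handle_predict(self.rfile.read(length)))


def serve() -> None:
    """Blocking entrypoint for the container."""
    HTTPServer(("0.0.0.0", PORT), Handler).serve_forever()
```

Calling `serve()` would be the container’s entrypoint; keeping `handle_predict` separate from the HTTP handler makes the request/response mapping easy to unit-test.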

Running Kedro… everywhere? Machine Learning Pipelines on Kubeflow, Vertex AI, Azure and Airflow | 7 min | MLOps | Marcin Zabłocki | GetInData Blog

Kedro is an open-source Python framework for creating reproducible, maintainable and modular data science code. By using Kedro we address the following challenges in ML projects:

- Pipeline building

- Configuration

- Parametrization

- Dataset management

- Reproducibility

- Data processing

- Model training / evaluation

The article covers plugins for Kedro that allow running Kedro pipelines at scale on serverless/managed cloud services from all of the major cloud providers (Google Cloud Platform, AWS and Azure), as well as on existing infrastructure such as Kubeflow Pipelines (Kubernetes-based) or Apache Airflow.
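Kedro builds pipelines from nodes, each a plain Python function with named inputs and outputs that are resolved through a Data Catalog. The toy runner below is not Kedro’s API (in Kedro you would use `kedro.pipeline.node` and `Pipeline` and let Kedro’s runners execute them); it only illustrates why declaring dataset names lets a framework work out the execution order by itself. The `Node` class and `run_pipeline` function are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass(eq=False)  # compare nodes by identity, not by field values
class Node:
    """A pure function plus the names of the datasets it reads and writes."""
    func: Callable[..., Any]
    inputs: List[str]
    output: str


def run_pipeline(nodes: List[Node], catalog: Dict[str, Any]) -> Dict[str, Any]:
    """Run nodes in dependency order, resolving inputs from a shared catalog."""
    catalog = dict(catalog)  # don't mutate the caller's catalog
    pending = list(nodes)
    while pending:
        # A node is ready once all of its input datasets exist.
        ready = [n for n in pending if all(i in catalog for i in n.inputs)]
        if not ready:
            raise ValueError("Unresolvable inputs or a cycle in the pipeline")
        for node in ready:
            catalog[node.output] = node.func(*(catalog[i] for i in node.inputs))
            pending.remove(node)
    return catalog


# Nodes are declared out of order on purpose; the runner resolves the order.
nodes = [
    Node(lambda clean, scale: [x * scale for x in clean], ["clean", "params:scale"], "features"),
    Node(lambda raw: [x for x in raw if x is not None], ["raw"], "clean"),
]
result = run_pipeline(nodes, {"raw": [1, None, 3], "params:scale": 2})
print(result["features"])  # [2, 6]
```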

DATA LIBRARY

Since this edition of DATA Pill is focused on MLOps, this ebook had to be on the list.

MLOps: Power Up Machine Learning Process - Build Feature Stores Faster | 85 pages | MLOps | Jakub Jurczak | GetInData

- A step-by-step guide to building a Feature Store

- A comparison of the most popular Feature Stores

- An example of MLOps architecture and workflow

- How to integrate GCP with Snowflake using Terraform

- The Vertex AI platform: how it works in practice
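One problem every feature store has to solve is point-in-time correctness: when building training data, each label must be joined with the feature values that were known at that moment, never later ones. The sketch below illustrates that lookup over a hypothetical in-memory feature history sorted by timestamp; it is a general technique, not necessarily the design described in the ebook.

```python
from bisect import bisect_right
from typing import Dict, List, Optional, Tuple

# Hypothetical offline store: per-entity (timestamp, value) pairs sorted by timestamp.
FeatureHistory = Dict[str, List[Tuple[int, float]]]


def point_in_time_value(history: FeatureHistory, entity: str, ts: int) -> Optional[float]:
    """Latest feature value recorded at or before ts (no future leakage)."""
    rows = history.get(entity, [])
    timestamps = [t for t, _ in rows]
    idx = bisect_right(timestamps, ts)
    return rows[idx - 1][1] if idx > 0 else None


def build_training_rows(history: FeatureHistory,
                        labels: List[Tuple[str, int, int]]) -> List[Tuple[str, int, Optional[float], int]]:
    """Join label events (entity, ts, label) with point-in-time feature values."""
    return [(entity, ts, point_in_time_value(history, entity, ts), label)
            for entity, ts, label in labels]


history = {"user_1": [(10, 0.1), (20, 0.5), (30, 0.9)]}
print(build_training_rows(history, [("user_1", 25, 1), ("user_2", 15, 0)]))
# [('user_1', 25, 0.5, 1), ('user_2', 15, None, 0)]
```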

PODCAST

Tools for Deploying Data Models into Production | 1 h 20 min | AI, ML & Cloud | host: Jon Krohn; guest: Erik Bernhardsson | Super Data Science Podcast

Jon talks with Erik Bernhardsson, who invented Spotify’s original music recommendation system. A few of the topics they covered:

- The data problem that Erik’s company Modal Labs solved

- How Erik built Spotify’s original music recommendation system

- How to deploy a data model into the cloud

DataTube

Productionizing ML Pipelines with Airflow, Kedro, and Great Expectations | 18 min | ML | Kenten Danas | Apache Airflow

Machine learning models can add value and insight to many projects, but they can also be challenging to put into production due to problems such as a lack of reproducibility, difficulty in maintaining integrations and sneaky data quality issues. Kedro, a framework for creating reproducible, maintainable and modular data science code, and Great Expectations, a framework for data validation, are two great open-source Python tools that can address some of these problems. Both integrate seamlessly with Airflow for flexible and powerful ML pipeline orchestration.

CONFS, EVENTS AND MEETUPS

Building Machine Learning pipelines with Kedro and Vertex AI on GCP | 25 October | MLOps | Michał Bryś | Free Webinar

Last reminder. Topics:

- Why we need a pipeline for machine learning models

- Kedro, an open-source Python framework for creating reproducible, maintainable and modular data science code

- Running Machine Learning pipelines on cloud using Vertex AI on the Google Cloud Platform

- Q&A session

Have any interesting content to share in the DATA Pill newsletter?

➡ Join us on GitHub